This case study has been authored by Naveen K M

The Challenge

A robust and effective CI/CD process for UI development (ReactJS / Redux apps) requires additional capabilities, primarily cross-browser testing, accessibility testing, and multi-resolution testing. Many of these are manual steps performed by Test Engineers, which slows down code deployment to production even when a microservice / micro-frontend-based application is being developed. This case study describes one such challenge that my team at Sabre faced and the solution we came up with to overcome it.

The Opportunity

The primary objective of the solution is to automate the various UI aspects of testing and to find the open source tools that best fit the project timelines. The factors considered in arriving at the solution were:

- Create reusable code for infrastructure set-up for UI testing in the cloud

- Develop a library for automating cross-browser and multi-resolution testing, and update the test case repository and test results via code

- Identify a repeatable way to test accessibility standards for all UI-based applications

- Continuously measure and report page load performance scores, and make the reporting extensible to new pages as they are developed

- Provide a centralized dashboard that collates all test results and reports of the multistage pipeline, across the various microservices / pages, into a single view

- Utilize only open source technologies and reuse Sabre recommended or licensed libraries/tools/frameworks

The Solution

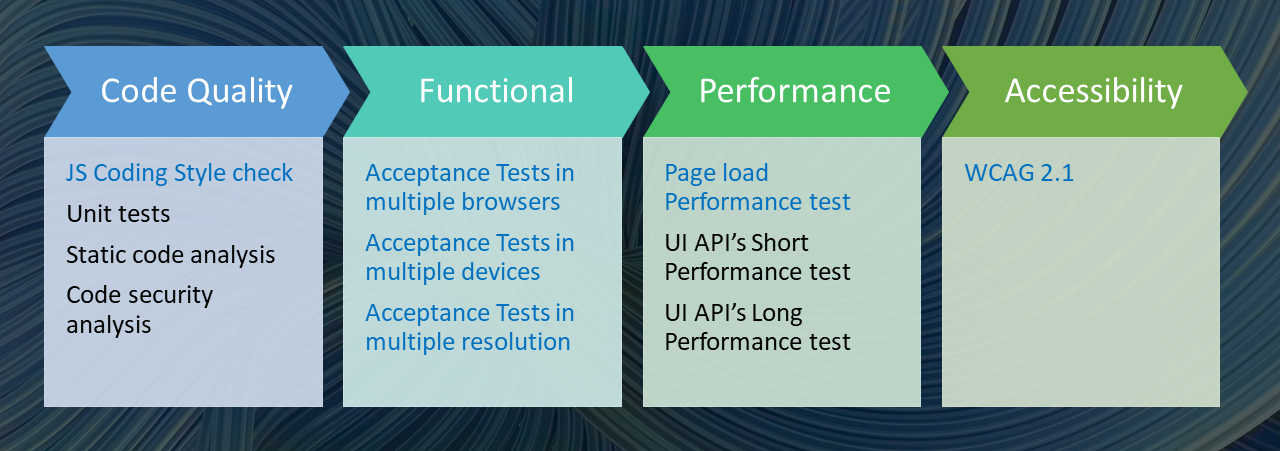

The diagram below summarizes what should be automated, tested, and signed off as part of continuous integration and deployment before the code is ready to be deployed to higher environments such as UAT or Production.

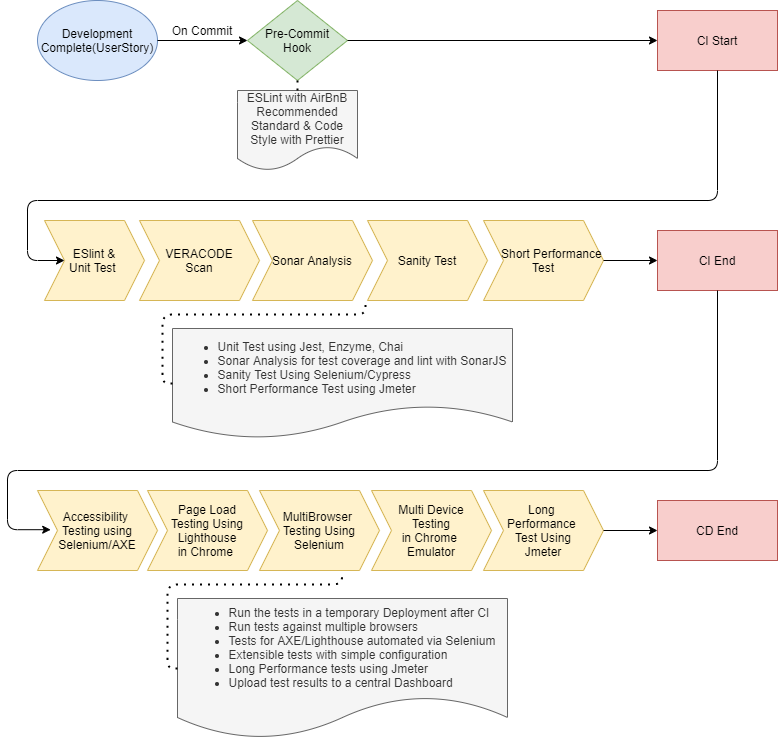

Let’s dive into each of these and see how an automated pipeline was implemented for it.

Test Environment

Typically, CI/CD pipelines are hosted in Linux-based environments. It is a challenge to simulate UI testing with browsers such as IE and Edge, which are not supported on a Linux-based OS. Also, many of these testing use cases need browsers launched on the machine, and headless browser testing does not give accurate results for them. To overcome this, the team created AWS CloudFormation scripts to launch a Windows server in AWS. The scripts cover the creation of the Windows machine, the installation of the required open source software (Jenkins, Jenkins plugins, Git, browsers, UI testing libraries), and the scripts that establish connectivity to the Sabre domain. Because all of this is captured in a CloudFormation script, even the infrastructure set-up is automated. This helps any product team to spin up a new Windows box for CI/CD purposes within a matter of a few seconds. The required firewall settings are also included in the CloudFormation script, to allow only the minimum needed connectivity (inbound/outbound).

CI/CD Pipeline

The various elements of testing are knitted together into different steps, as depicted in the flowchart below.

CI/CD pipeline elements. The ones in blue are specific to UI development

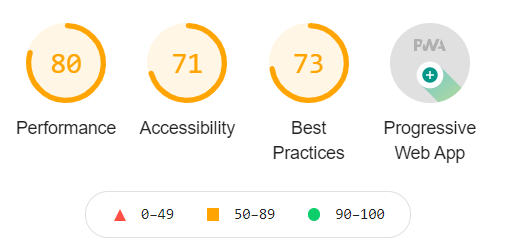
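The idea of knitting the test elements into ordered steps can be illustrated with a minimal fail-fast sketch. This is not the team's Jenkins pipeline: the `Stage` class, the `run_pipeline` function, and the placeholder checks are hypothetical, and the stage order simply follows the order of the sections in this case study rather than the exact flowchart.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str
    check: Callable[[], bool]  # returns True when the stage passes


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failing stage (fail-fast)."""
    executed: List[str] = []
    for stage in stages:
        executed.append(stage.name)
        if not stage.check():
            return False, executed
    return True, executed


# Placeholder checks; a real pipeline would invoke the actual tools here.
stages = [
    Stage("code quality checks", lambda: True),
    Stage("functional testing", lambda: True),
    Stage("performance testing", lambda: False),  # simulated failure
    Stage("accessibility testing", lambda: True),
]

ok, executed = run_pipeline(stages)
print(ok, executed)
```

In this run the accessibility stage is never reached, because the simulated performance failure stops the pipeline before it.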

♦ Code Quality Checks

Code quality is at the heart of any development project, and quality checks help developers maintain clean code. The aspects of good quality code include coding style, reliability, security, and maintainability.

Coding Style Check with Git Hooks

The team developed a Git hook that automatically lints and formats the code on every Git commit, using the Airbnb style guide. This ensures that all the code in the codebase looks as if a single person wrote it, no matter how many people contributed. A similar Git hook was developed for backend code, which checks the Java code against the Google Java style.

Unit Tests

The team did a proof-of-concept on many JavaScript-based unit test frameworks. Based on the evaluation, a combination of Jest and Enzyme was chosen as the best fit for ReactJS-based UI apps. The unit tests run as part of every build, and their results also feed the Sonar coverage metrics.

Static Code Analysis

Sabre has a well-established Bitbucket integration with Sonar, and the team decided to reuse this for JS code analysis. The team developed a custom hook in Bitbucket that triggers a Sonar analysis for every pull request. The Sonar analysis checks code coverage, reliability, security, and maintainability. If the quality gates are not passed for the new/changed code, the pull request cannot be merged into the mainline branch. The Jest/Enzyme code coverage report generated as part of the build is plugged into Sonar for reporting the coverage metrics.

Code Security Analysis

Static security analysis of the JS code was done by adding a pipeline step that triggers a Veracode scan. This CI step zips the required JS files and initiates the scan. The results of the Veracode scan are visible on the Veracode website or available via email notification. The team creates a list of defects arising from the Veracode scan and fixes the vulnerabilities in a timely manner.
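The packaging half of the security scan step (zipping the required JS files) could look roughly like the sketch below. It is an illustration only: the function name `package_js_sources`, the file suffixes, and the excluded directory names are assumptions, and the upload to Veracode is left out because the case study does not describe how it is invoked.

```python
import tempfile
import zipfile
from pathlib import Path
from typing import List

JS_SUFFIXES = {".js", ".jsx"}                          # assumed file types
EXCLUDED_DIRS = {"node_modules", "build", "coverage"}  # assumed exclusions


def package_js_sources(root: Path, archive: Path) -> List[str]:
    """Zip the JS sources under root and return the archived relative paths."""
    added: List[str] = []
    with zipfile.ZipFile(archive, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(root.rglob("*")):
            rel = path.relative_to(root)
            if not path.is_file() or path.suffix not in JS_SUFFIXES:
                continue
            if EXCLUDED_DIRS.intersection(rel.parts):
                continue  # skip third-party and generated code
            zf.write(path, rel.as_posix())
            added.append(rel.as_posix())
    return added


# Usage on a throwaway project tree (file names are made up).
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp) / "app"
    (root / "src").mkdir(parents=True)
    (root / "node_modules" / "lib").mkdir(parents=True)
    (root / "src" / "index.js").write_text("console.log('hi');")
    (root / "src" / "App.jsx").write_text("export default null;")
    (root / "src" / "style.css").write_text("body {}")
    (root / "node_modules" / "lib" / "dep.js").write_text("module.exports = 1;")
    archived = package_js_sources(root, Path(tmp) / "scan.zip")
    with zipfile.ZipFile(Path(tmp) / "scan.zip") as zf:
        names_in_zip = zf.namelist()

print(archived)
```

Only the two files under `src` with a JS suffix end up in the archive; the stylesheet and the dependency under `node_modules` are skipped.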
A successful CD can only be triggered once the Veracode report is 100% clean.

♦ Functional Testing

Acceptance Tests in Multiple Browsers / Devices / Resolutions

The core aspect of any UI testing exercise is to automate the testing of different browsers, devices, and form factors effectively. After doing POCs on the various open source tools available, the team chose Selenium WebDriver-based testing. A reusable library was developed on top of Selenium WebDriver; it takes minimal configuration as input to run the tests in multiple browsers and devices while setting up the required resolution or form factor. The tests can also be configured to run at different times of the day and to send automated reports to pre-defined email IDs. The team also researched the most used browsers and the most widely used resolutions, to set these up as defaults, with the ability to extend to other configurations too. The library updates both the test cases and the test results in Rally via API integration. Automatic creation of defects in Rally when a test case fails is also planned and is currently under development.

♦ Performance Testing

Page Load Performance Test

It is critical to measure the page load time of UI applications and benchmark it against accepted industry standards. This provides a realistic check on what the end user experiences with each page. Lighthouse is an open-source, automated tool available in Chrome DevTools, which audits performance, accessibility, progressive web apps, SEO, and more. Each audit provides a score out of 100 for these aspects and an indicator of how well or badly the page is performing, as shown below.

CI/CD pipeline Elements and Solution

The team developed a framework with Node.js and Selenium on top of Lighthouse to automate the audit of each page; the CI tests pass only if the score is 90 or above. The tests scale easily: when a new page is developed, adding it to the list of pages to be audited is a simple configuration step. The framework can be used by all other UI apps too, and it also helps in auditing the load times of complex modal pop-ups. The audit data is pushed to the central dashboard via API, and the reports are made available in .csv format as well.
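The pass mark of 90 can be illustrated with a small gate over Lighthouse results. The sketch is in Python for brevity (the team's framework used Node.js) and assumes that each page's Lighthouse JSON report has already been produced and loaded; in that report, category scores are stored as fractions between 0 and 1. The function names, the page paths, and the trimmed sample reports are hypothetical.

```python
from typing import Dict, List, Optional

THRESHOLD = 90  # pass mark stated in the text


def scores_out_of_100(report: dict) -> Dict[str, Optional[int]]:
    """Convert Lighthouse category scores (0..1 fractions) to 0..100 integers."""
    result: Dict[str, Optional[int]] = {}
    for name, category in report["categories"].items():
        score = category.get("score")
        result[name] = None if score is None else round(score * 100)
    return result


def failing_categories(report: dict, threshold: int = THRESHOLD) -> List[str]:
    """Return the categories below the threshold; a missing score also fails."""
    return sorted(
        name
        for name, score in scores_out_of_100(report).items()
        if score is None or score < threshold
    )


# Hand-written, trimmed stand-ins for Lighthouse JSON reports, keyed by page.
# Adding a page to this mapping is the only change needed to audit it.
reports = {
    "/home": {"categories": {"performance": {"score": 0.96},
                             "accessibility": {"score": 1.0}}},
    "/search": {"categories": {"performance": {"score": 0.72},
                               "accessibility": {"score": 0.93}}},
}

failures = {page: failing_categories(report) for page, report in reports.items()}
build_passes = not any(failures.values())
print(failures, build_passes)
```

Here the hypothetical `/search` page scores 72 on performance, so the build would fail until that page is improved.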

APIs Short Performance & Long Performance Test

While Lighthouse-based testing is focused on the UI, much of the performance depends on how quickly the APIs respond with the first byte. JMeter-based scripts are developed iteratively to test the API response time under load, and they are run for shorter and longer periods as part of CI and CD.
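One way to turn such a run into a number is to summarize the time to first byte per API. The sketch below assumes JMeter results saved as CSV with a header row, where the `Latency` column holds the time to first byte in milliseconds and `label` holds the sampler name. The sample rows, the API labels, and the choice of the 95th percentile are made up for illustration, and only a subset of the usual columns is shown.

```python
import csv
import io
import math
from collections import defaultdict
from typing import Dict, List


def percentile(values: List[int], pct: float) -> int:
    """Nearest-rank percentile: the value at rank ceil(pct% of n), 1-based."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def latency_by_label(jtl_csv: str, pct: float = 95) -> Dict[str, int]:
    """Group the Latency column by sampler label and take the given percentile."""
    samples: Dict[str, List[int]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(jtl_csv)):
        samples[row["label"]].append(int(row["Latency"]))
    return {label: percentile(vals, pct) for label, vals in samples.items()}


# Made-up sample rows with a subset of JMeter's CSV columns.
SAMPLE = """timeStamp,elapsed,label,responseCode,success,Latency
1600000000000,120,GET /flights,200,true,80
1600000000100,150,GET /flights,200,true,95
1600000000200,900,GET /flights,200,true,640
1600000000300,110,GET /fares,200,true,70
"""

p95 = latency_by_label(SAMPLE)
print(p95)
```

A CI step could compare these values against an agreed limit per API and fail the build when the limit is exceeded; the limit itself is a project decision that the case study does not state.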

♦ Accessibility Testing

WCAG 2.1

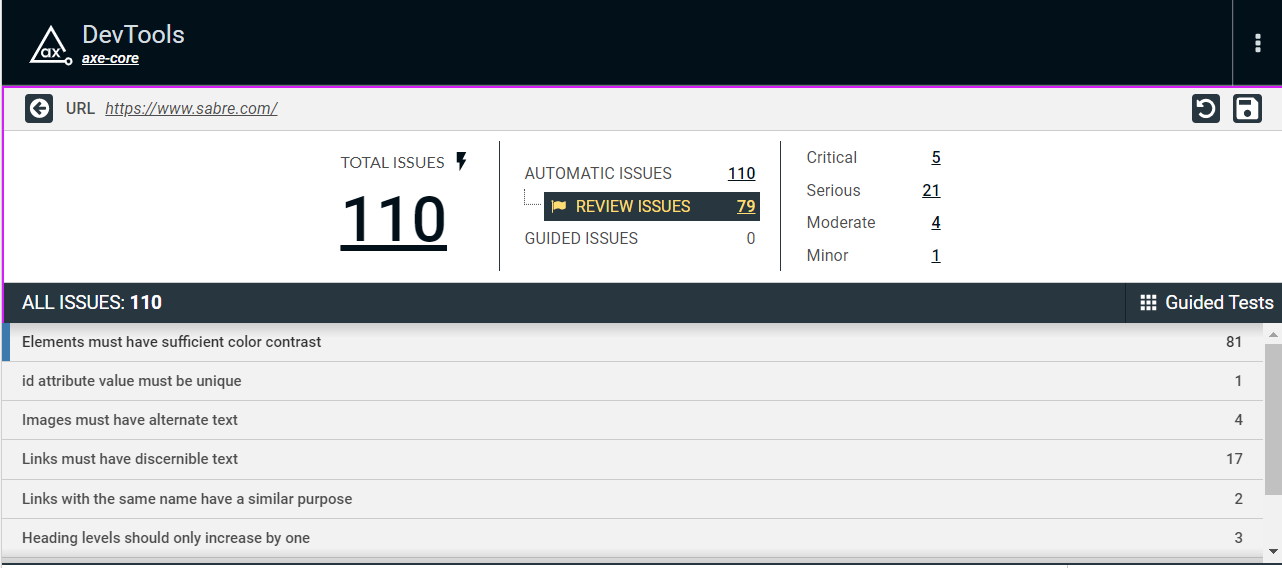

In 2020, Sabre started an initiative to ensure that all products and applications adhere to the Digital Accessibility Policy and are compliant at the WCAG 2.1 level. Full automation of WCAG 2.1 testing is still not possible, but the team's idea was to get to 40-50% automation of accessibility testing and integrate it into Continuous Deployment.

The team created a framework on Node.js and Selenium that uses the Axe browser plugin, an open source accessibility testing tool. The framework works the same way as the automated Lighthouse audits, and the CI tests pass only if the page has zero critical, serious, moderate, and minor issues.

The tests are scalable: a new page can be added easily, and complex modal pop-ups can be audited as well. The framework is reusable across UI apps too. The audit data is pushed to the central dashboard via API, and the reports are made available in .csv format too.
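The zero-issue rule and the .csv report can be sketched as follows, again in Python for brevity and assuming the Axe results for each page are already available as JSON. In that output, each entry in `violations` carries an `impact` of critical, serious, moderate, or minor. The function names, the page paths, and the sample results are hypothetical, and the column layout of the team's actual report is not known.

```python
import csv
import io
from collections import Counter
from typing import Dict

IMPACTS = ("critical", "serious", "moderate", "minor")


def count_by_impact(results: dict) -> Dict[str, int]:
    """Count Axe violations per impact level; absent levels are reported as 0."""
    counts = Counter(v.get("impact") for v in results.get("violations", []))
    return {impact: counts.get(impact, 0) for impact in IMPACTS}


def page_passes(results: dict) -> bool:
    """A page passes only with zero issues at every impact level."""
    return sum(count_by_impact(results).values()) == 0


def to_csv(per_page: Dict[str, dict]) -> str:
    """Render one row per page: issue counts per impact and the verdict."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["page", *IMPACTS, "passed"])
    for page, results in per_page.items():
        counts = count_by_impact(results)
        writer.writerow([page, *(counts[i] for i in IMPACTS), page_passes(results)])
    return buf.getvalue()


# Hand-written, trimmed stand-ins for Axe results, keyed by page.
audits = {
    "/home": {"violations": []},
    "/search": {"violations": [
        {"id": "color-contrast", "impact": "serious", "nodes": [{}, {}]},
        {"id": "image-alt", "impact": "critical", "nodes": [{}]},
    ]},
}

report = to_csv(audits)
print(report)
```

Counting by impact rather than only checking for an empty list keeps the per-level numbers available for a dashboard, while the verdict still follows the zero-issue rule.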

Executive Dashboard

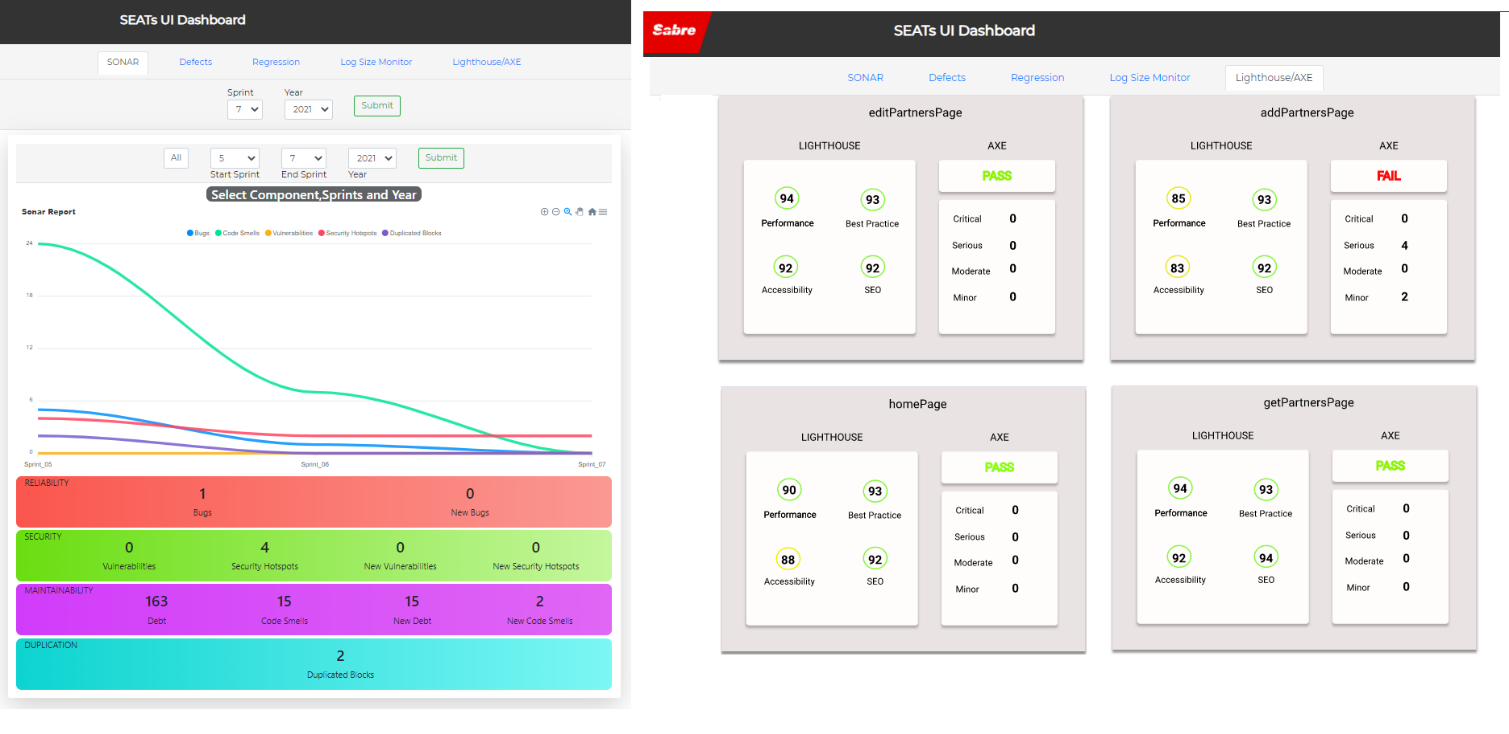

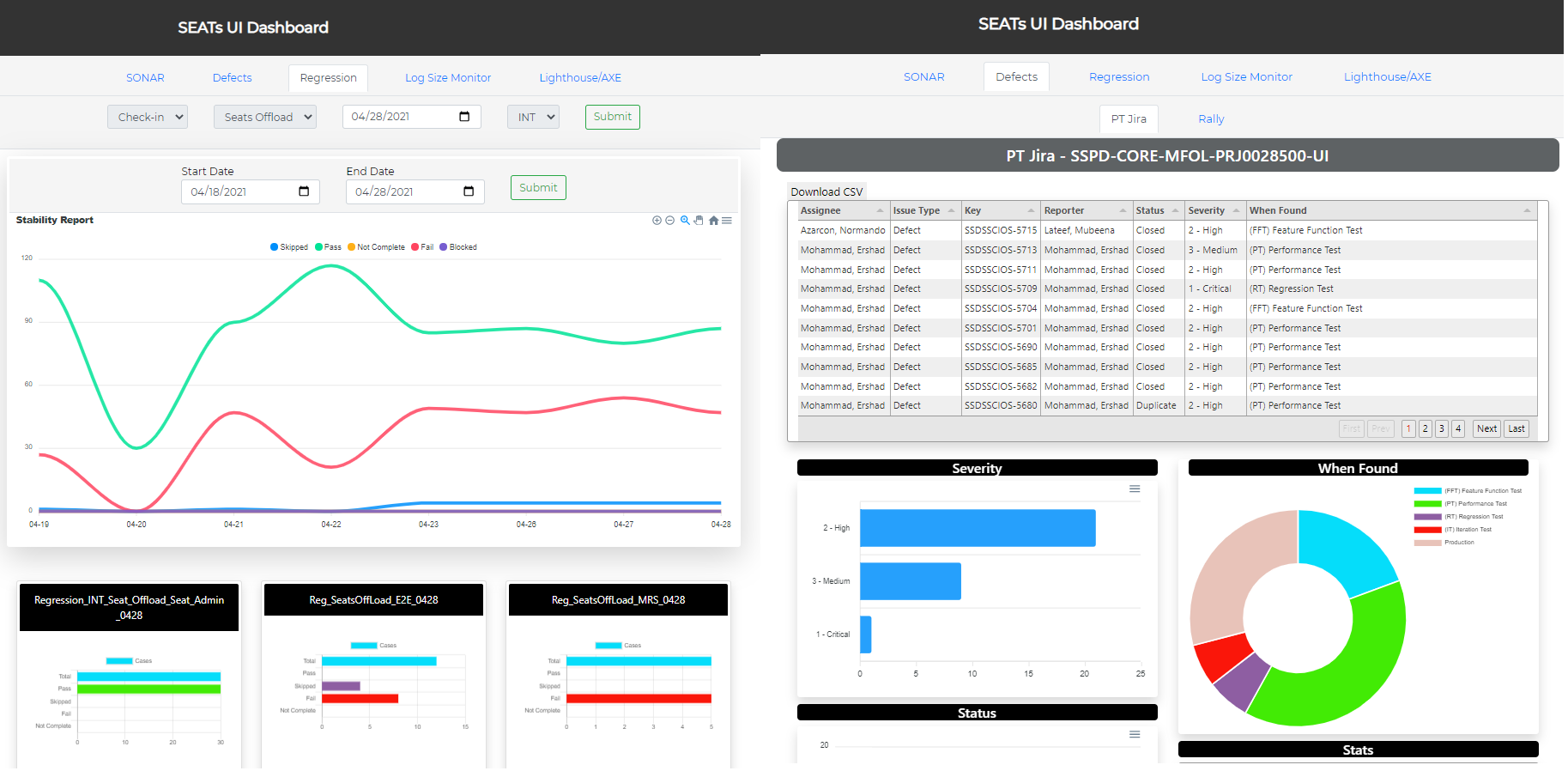

While automating all of these steps is necessary to ensure that feedback is obtained within the development cycle, it is equally important to have all the information available in a single dashboard that shows the metrics of all microservices in a single pane. Such a dashboard was developed by the Central QA Team. In addition to providing the metrics at any given point in time, it captures the trend of how different tests have fared sprint over sprint. This gives the executive leadership an easy view of the overall product development quality in just a few clicks.

Sonar metrics for all microservices over multiple sprints (left). Lighthouse/Axe metrics for all pages (right)

Regression test metrics for all microservices over time (left). Defect metrics from JIRA/Rally (right)

The Impact

At Sabre, teams are continuously looking to modernize systems and applications. In this context, an end-to-end CI/CD pipeline that takes care of all UI aspects of testing is very much needed, and multiple scrum teams can benefit from reusing the solution described above. Having such automation capabilities available right at the beginning of a multi-year development project is very effective in getting early feedback and reducing overall cost and manual effort. In addition, having all of the results available in a single dashboard provides the much-needed executive overview right from the word go. The solution is now used by multiple teams in Tech transformation within Sabre and is helping them make improvements proactively.

CREDITS:

- Development – Rajesh Samal, Nitin Singla, Hemachandra Mutharasi

- Architecture overview – Suresh SG

- Central QA Team – Shalini Thillainathan, Koushik J, Gaurav Reddy

- Concept, Mindmap, and Engineering Leadership – Naveen K M